1. Prerequisites

1.1 Configure single nodes

First, set up each node on its own by following my tutorial "Running Hadoop with HBase On CentOS Linux (Single-Node Cluster)".

1.2 SSH public access

Add a mapping to /etc/hosts on each of your nodes:

10.1.0.55 master

10.1.0.56 slave
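
As a minimal sketch of this step, the mapping can be appended idempotently with a small shell helper. The function name add_host_entry and its optional file argument are illustrative assumptions, not part of Hadoop or CentOS tooling; the IP addresses are the ones used in this post.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped.
# Usage: add_host_entry IP NAME [FILE]   (FILE defaults to /etc/hosts)
add_host_entry() {
    ip="$1"; name="$2"; file="${3:-/etc/hosts}"
    # Succeeds if any non-comment line already lists NAME as a hostname field.
    if awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (run as root on every node):
#   add_host_entry 10.1.0.55 master
#   add_host_entry 10.1.0.56 slave
```

Running it twice leaves a single entry per host name, so it is safe to repeat on a node that is already configured.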

For passwordless SSH between the nodes, add the public key from master to ~/.ssh/authorized_keys on slave and vice versa, so that eventually you are able to do the following:

[root@37 /usr/local/bin/hbase]ssh master

The authenticity of host 'master (10.1.0.55)' can't be established.

RSA key fingerprint is 09:e2:73:ac:6f:42:d1:da:13:20:76:10:36:29:c4:62.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'master,10.1.0.55' (RSA) to the list of known hosts.

Last login: Thu Dec 25 07:19:16 2008 from slave

[root@37 ~]exit

logout

Connection to master closed.

[root@37 /usr/local/bin/hbase]ssh slave

The authenticity of host 'slave (10.1.0.56)' can't be established.

RSA key fingerprint is 5c:4f:81:19:ad:f3:78:02:ce:64:f1:67:10:ca:c5:b8.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'slave,10.1.0.56' (RSA) to the list of known hosts.

Last login: Thu Dec 25 07:16:47 2008 from 38.d.de.static.xlhost.com

[root@38 ~]exit

logout

Connection to slave closed.
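
The key exchange that makes the session above possible can be sketched as follows. The helper name authorize_key is an illustrative assumption; ssh-keygen and the authorized_keys file are standard OpenSSH, and the empty passphrase is assumed only because the Hadoop start scripts need non-interactive logins.

```shell
# Hypothetical helper: append a public key to an authorized_keys file exactly once.
# Usage: authorize_key PUBKEY_FILE [AUTHORIZED_KEYS_FILE]
authorize_key() {
    pub="$1"; auth="${2:-$HOME/.ssh/authorized_keys}"
    mkdir -p "$(dirname "$auth")"
    touch "$auth"
    # -F fixed string, -x whole-line match, -q quiet: skip keys already present.
    grep -qxF -e "$(cat "$pub")" "$auth" || cat "$pub" >> "$auth"
    chmod 600 "$auth"
}

# Assumed flow on master (repeat in the other direction on slave):
#   ssh-keygen -t rsa -P "" -f ~/.ssh/id_rsa       # generate a key pair without passphrase
#   authorize_key ~/.ssh/id_rsa.pub                # allows "ssh master" from master itself
#   cat ~/.ssh/id_rsa.pub | ssh slave 'cat >> ~/.ssh/authorized_keys'
```

The local call matters because the start scripts also open an SSH connection from master to itself.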

2. Cluster Overview

The master node will also act as a slave because we only have two machines available in our cluster but still want to spread data storage and processing to multiple machines.

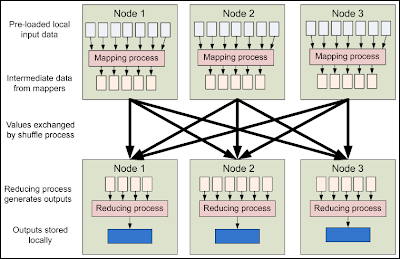

The master node will run the "master" daemons for each layer: namenode for the HDFS storage layer, and jobtracker for the MapReduce processing layer. Both machines will run the "slave" daemons: datanode for the HDFS layer, and tasktracker for the MapReduce processing layer. Basically, the "master" daemons are responsible for coordinating and managing the "slave" daemons, while the latter do the actual data storage and data processing work.

3. Hadoop core Configuration

3.1 conf/masters (master only)

The conf/masters file defines the master nodes of our multi-node cluster.

On master, update $HADOOP_HOME/conf/masters so that it looks like this:

master

3.2 conf/slaves (master only)

This conf/slaves file lists the hosts, one per line, where the Hadoop slave daemons (datanodes and tasktrackers) will run. We want both the master box and the slave box to act as Hadoop slaves because we want both of them to store and process data.

On master, update $HADOOP_HOME/conf/slaves so that it looks like this:

master

slave

If you have additional slave nodes, just add them to the conf/slaves file, one per line (do this on all machines in the cluster).

master

slave

slave01

slave02

slave03
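
Both files are plain lists with one host name per line, so they are easy to generate. The sketch below assumes a hypothetical helper named write_host_list; the host names are the ones from this post.

```shell
# Hypothetical helper: write one host name per line to a Hadoop conf file,
# replacing whatever the file contained before.
# Usage: write_host_list FILE HOST...
write_host_list() {
    file="$1"; shift
    printf '%s\n' "$@" > "$file"
}

# Example, on master:
#   write_host_list "$HADOOP_HOME/conf/masters" master
#   write_host_list "$HADOOP_HOME/conf/slaves"  master slave
```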

3.3 conf/hadoop-site.xml (all machines)

The following is a sample configuration for all hosts, with a description of each parameter:

    <configuration>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>/usr/local/bin/hadoop/datastore/hadoop-${user.name}</value>
        <description>A base for other temporary directories.</description>
      </property>
      <property>
        <name>fs.default.name</name>
        <value>hdfs://master:54310</value>
        <description>The name of the default file system. A URI whose
        scheme and authority determine the FileSystem implementation. The
        uri's scheme determines the config property (fs.SCHEME.impl) naming
        the FileSystem implementation class. The uri's authority is used to
        determine the host, port, etc. for a filesystem.</description>
      </property>
      <property>
        <name>mapred.job.tracker</name>
        <value>master:54311</value>
        <description>The host and port that the MapReduce job tracker runs
        at. If "local", then jobs are run in-process as a single map
        and reduce task.</description>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>1</value>
        <description>Default block replication.
        The actual number of replications can be specified when the file is created.
        The default is used if replication is not specified in create time.</description>
      </property>
      <property>
        <name>mapred.reduce.tasks</name>
        <value>8</value>
        <description>The default number of reduce tasks per job. Typically set
        to a prime close to the number of available hosts. Ignored when
        mapred.job.tracker is "local".</description>
      </property>
      <property>
        <name>mapred.tasktracker.reduce.tasks.maximum</name>
        <value>8</value>
        <description>The maximum number of reduce tasks that will be run
        simultaneously by a task tracker.</description>
      </property>
      <property>
        <name>mapred.child.java.opts</name>
        <value>-Xmx500m</value>
        <description>Java opts for the task tracker child processes.
        The following symbol, if present, will be interpolated: @taskid@ is replaced
        by current TaskID. Any other occurrences of '@' will go unchanged.
        For example, to enable verbose gc logging to a file named for the taskid in
        /tmp and to set the heap maximum to be a gigabyte, pass a 'value' of:
        -Xmx1024m -verbose:gc -Xloggc:/tmp/@taskid@.gc
        The configuration variable mapred.child.ulimit can be used to control the
        maximum virtual memory of the child processes.</description>
      </property>
    </configuration>
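
Each setting in conf/hadoop-site.xml is a property element with a name, a value, and an optional description, all wrapped in a single configuration element. As a rough sketch, the file can be generated from a script; emit_property and emit_site_config are hypothetical helper names, the output is deliberately abbreviated to three of the properties above, and values are written verbatim (no XML escaping), which is fine for the values in this post.

```shell
# Hypothetical helper: print one <property> element for a Hadoop site file.
# Usage: emit_property NAME VALUE
emit_property() {
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Hypothetical helper: print an abbreviated site file for a given master host.
# Usage: emit_site_config MASTER_HOST REPLICATION
emit_site_config() {
    printf '<?xml version="1.0"?>\n<configuration>\n'
    emit_property fs.default.name    "hdfs://$1:54310"
    emit_property mapred.job.tracker "$1:54311"
    emit_property dfs.replication    "$2"
    printf '</configuration>\n'
}

# Example: preview the result before copying it to every node.
#   emit_site_config master 1
```

Generating the file from one place makes it easier to keep it identical on all machines, which this section requires.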

Very important: format the data storage on each node before proceeding.
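
A sketch of the formatting step, assuming it refers to the standard `bin/hadoop namenode -format` command of Hadoop 0.18 and that Hadoop lives in /usr/local/bin/hadoop as in the transcripts of this post. The wrapper name format_namenode is hypothetical. Formatting discards any existing HDFS metadata, so only run it on a fresh installation.

```shell
# Hypothetical wrapper: format HDFS if the hadoop launcher can be found.
format_namenode() {
    hadoop_bin="${HADOOP_HOME:-/usr/local/bin/hadoop}/bin/hadoop"
    if [ ! -x "$hadoop_bin" ]; then
        echo "hadoop launcher not found at $hadoop_bin" >&2
        return 1
    fi
    # Destructive: re-initializes the namenode's storage directory.
    "$hadoop_bin" namenode -format
}

# Example:
#   format_namenode
```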

3.4 Start/Stop Hadoop cluster

HDFS daemons: run the command $HADOOP_HOME/bin/start-dfs.sh on the machine you want the namenode to run on, and $HADOOP_HOME/bin/stop-dfs.sh to stop them again.

MapReduce daemons: run the command $HADOOP_HOME/bin/start-mapred.sh on the machine you want the jobtracker to run on. This will bring up the MapReduce cluster with the jobtracker running on the machine you ran the previous command on, and tasktrackers on the machines listed in the conf/slaves file.
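
The order of the scripts matters: HDFS comes up before MapReduce. Stopping in the reverse order (MapReduce first, then HDFS) is a common convention rather than something stated in this post. The sketch below encodes that order; cluster_scripts and run_cluster are hypothetical helper names, while the four scripts ship with Hadoop 0.18.

```shell
# Print the Hadoop control scripts in the order they should be run.
# Usage: cluster_scripts start|stop
cluster_scripts() {
    case "$1" in
        start) printf '%s\n' bin/start-dfs.sh bin/start-mapred.sh ;;
        stop)  printf '%s\n' bin/stop-mapred.sh bin/stop-dfs.sh ;;
        *)     echo "usage: cluster_scripts start|stop" >&2; return 2 ;;
    esac
}

# Run the scripts from the Hadoop directory, aborting on the first failure.
# The body runs in a subshell so the caller's working directory is unchanged.
run_cluster() (
    scripts=$(cluster_scripts "$1") || exit 2
    cd "${HADOOP_HOME:-/usr/local/bin/hadoop}" || exit 1
    for s in $scripts; do
        "./$s" || exit 1
    done
)

# Example, on master:
#   run_cluster start
#   run_cluster stop
```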

[root@37 /usr/local/bin/hadoop]bin/start-dfs.sh

starting namenode, logging to /usr/local/bin/hadoop/bin/../logs/hadoop-root-namenode-37.c3.33.static.xlhost.com.out

master: starting datanode, logging to /usr/local/bin/hadoop/bin/../logs/hadoop-root-datanode-37.c3.33.static.xlhost.com.out

slave: starting datanode, logging to /usr/local/bin/hadoop/bin/../logs/hadoop-root-datanode-38.c3.33.static.xlhost.com.out

master: starting secondarynamenode, logging to /usr/local/bin/hadoop/bin/../logs/hadoop-root-secondarynamenode-37.c3.33.static.xlhost.com.out

[root@37 /usr/local/bin/hadoop]bin/start-mapred.sh

starting jobtracker, logging to /usr/local/bin/hadoop/bin/../logs/hadoop-root-jobtracker-37.c3.33.static.xlhost.com.out

master: starting tasktracker, logging to /usr/local/bin/hadoop/bin/../logs/hadoop-root-tasktracker-37.c3.33.static.xlhost.com.out

slave: starting tasktracker, logging to /usr/local/bin/hadoop/bin/../logs/hadoop-root-tasktracker-38.c3.33.static.xlhost.com.out

[root@37 /usr/local/bin/hadoop]jps

28638 DataNode

29052 Jps

28527 NameNode

28764 SecondaryNameNode

28847 JobTracker

28962 TaskTracker

[root@37 /usr/local/bin/hadoop]bin/hadoop dfs -copyFromLocal LICENSE.txt testWordCount

[root@37 /usr/local/bin/hadoop]bin/hadoop dfs -ls

Found 1 items

-rw-r--r-- 1 root supergroup 11358 2008-12-25 08:20 /user/root/testWordCount

[root@37 /usr/local/bin/hadoop]bin/hadoop jar hadoop-0.18.2-examples.jar wordcount testWordCount testWordCount-out

08/12/25 08:21:46 INFO mapred.FileInputFormat: Total input paths to process : 1

08/12/25 08:21:46 INFO mapred.FileInputFormat: Total input paths to process : 1

08/12/25 08:21:47 INFO mapred.JobClient: Running job: job_200812250818_0001

08/12/25 08:21:48 INFO mapred.JobClient: map 0% reduce 0%

08/12/25 08:21:53 INFO mapred.JobClient: map 50% reduce 0%

08/12/25 08:21:55 INFO mapred.JobClient: map 100% reduce 0%

08/12/25 08:22:01 INFO mapred.JobClient: map 100% reduce 12%

08/12/25 08:22:02 INFO mapred.JobClient: map 100% reduce 25%

08/12/25 08:22:05 INFO mapred.JobClient: map 100% reduce 41%

08/12/25 08:22:09 INFO mapred.JobClient: map 100% reduce 52%

08/12/25 08:22:14 INFO mapred.JobClient: map 100% reduce 64%

08/12/25 08:22:19 INFO mapred.JobClient: map 100% reduce 66%

08/12/25 08:22:24 INFO mapred.JobClient: map 100% reduce 77%

08/12/25 08:22:29 INFO mapred.JobClient: map 100% reduce 79%

08/12/25 08:25:30 INFO mapred.JobClient: map 100% reduce 89%

08/12/25 08:26:12 INFO mapred.JobClient: Job complete: job_200812250818_0001

08/12/25 08:26:12 INFO mapred.JobClient: Counters: 17

08/12/25 08:26:12 INFO mapred.JobClient: Job Counters

08/12/25 08:26:12 INFO mapred.JobClient: Data-local map tasks=1

08/12/25 08:26:12 INFO mapred.JobClient: Launched reduce tasks=9

08/12/25 08:26:12 INFO mapred.JobClient: Launched map tasks=2

08/12/25 08:26:12 INFO mapred.JobClient: Rack-local map tasks=1

08/12/25 08:26:12 INFO mapred.JobClient: Map-Reduce Framework

08/12/25 08:26:12 INFO mapred.JobClient: Map output records=1581

08/12/25 08:26:12 INFO mapred.JobClient: Reduce input records=593

08/12/25 08:26:12 INFO mapred.JobClient: Map output bytes=16546

08/12/25 08:26:12 INFO mapred.JobClient: Map input records=202

08/12/25 08:26:12 INFO mapred.JobClient: Combine output records=1292

08/12/25 08:26:12 INFO mapred.JobClient: Map input bytes=11358

08/12/25 08:26:12 INFO mapred.JobClient: Combine input records=2280

08/12/25 08:26:12 INFO mapred.JobClient: Reduce input groups=593

08/12/25 08:26:12 INFO mapred.JobClient: Reduce output records=593

08/12/25 08:26:12 INFO mapred.JobClient: File Systems

08/12/25 08:26:12 INFO mapred.JobClient: HDFS bytes written=6117

08/12/25 08:26:12 INFO mapred.JobClient: Local bytes written=19010

08/12/25 08:26:12 INFO mapred.JobClient: HDFS bytes read=13872

08/12/25 08:26:12 INFO mapred.JobClient: Local bytes read=8620
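
To sanity-check the job result, it can help to compute the same counts locally and compare them with the job output. The sketch below is a local analogue of the wordcount example, not the Hadoop implementation: it assumes words are whitespace-separated tokens and prints tab-separated word and count pairs. The name local_wordcount is hypothetical.

```shell
# Local analogue of the wordcount example: count whitespace-separated tokens.
# Reads the files given as arguments, or standard input if there are none.
local_wordcount() {
    awk '{ for (i = 1; i <= NF; i++) count[$i]++ }
         END { for (w in count) printf "%s\t%d\n", w, count[w] }' "$@" | sort
}

# Example: compare against the job output stored in HDFS
# (with several reducers the output is split across multiple part files).
#   local_wordcount LICENSE.txt > local.txt
#   bin/hadoop dfs -cat 'testWordCount-out/part-*' | sort > hdfs.txt
#   diff local.txt hdfs.txt
```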

4. HBase configuration

4.1 $HBASE_HOME/conf/hbase-site.xml

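    <configuration>
      <property>
        <name>hbase.rootdir</name>
        <value>hdfs://master:54310/hbase</value>
        <description>The directory shared by region servers.</description>
      </property>
      <property>
        <name>hbase.master</name>
        <value>master:60000</value>
        <description>The host and port that the HBase master runs at.</description>
      </property>
    </configuration>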
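
Note that hbase.rootdir starts with the same hdfs://master:54310 URI that fs.default.name uses in the Hadoop configuration. A mismatch between the two is easy to introduce, so a small check can be useful. The sketch below is a naive line-based reader, not a real XML parser, and the helper names get_property and check_rootdir are hypothetical.

```shell
# Hypothetical helper: print the <value> that follows a given <name> in a site file.
# Usage: get_property FILE NAME
get_property() {
    awk -v key="$2" '
        /<name>/ { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n); hit = (n == key) }
        hit && /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v); print v; exit }
    ' "$1"
}

# Hypothetical check: hbase.rootdir must live under the file system in fs.default.name.
# Usage: check_rootdir HADOOP_SITE_XML HBASE_SITE_XML
check_rootdir() {
    fs=$(get_property "$1" fs.default.name)
    root=$(get_property "$2" hbase.rootdir)
    if [ -z "$fs" ] || [ -z "$root" ]; then
        echo "could not read fs.default.name or hbase.rootdir" >&2
        return 1
    fi
    case "$root" in
        "$fs"/*) return 0 ;;
        *) echo "hbase.rootdir ($root) is not under fs.default.name ($fs)" >&2; return 1 ;;
    esac
}

# Example:
#   check_rootdir "$HADOOP_HOME/conf/hadoop-site.xml" "$HBASE_HOME/conf/hbase-site.xml"
```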

4.2 $HBASE_HOME/conf/regionservers

master

slave

4.3 Start HBase on master

Use the command $HBASE_HOME/bin/start-hbase.sh:

[root@37 /usr/local/bin/hbase]bin/start-hbase.sh

starting master, logging to /usr/local/bin/hbase/bin/../logs/hbase-root-master-37.c3.33.static.xlhost.com.out

slave: starting regionserver, logging to /usr/local/bin/hbase/bin/../logs/hbase-root-regionserver-38.c3.33.static.xlhost.com.out

master: starting regionserver, logging to /usr/local/bin/hbase/bin/../logs/hbase-root-regionserver-37.c3.33.static.xlhost.com.out

[root@37 /usr/local/bin/hbase]jps

30362 SecondaryNameNode

30455 JobTracker

30570 TaskTracker

30231 DataNode

32003 Jps

31772 HMaster

30115 NameNode

31919 HRegionServer

On slave you should see the following output:

[root@38 /usr/local/bin/hbase]jps

513 DataNode

6360 Jps

6214 HRegionServer

628 TaskTracker
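
Comparing jps output by eye gets tedious with more nodes. As a sketch, the check can be scripted; missing_daemons is a hypothetical helper, and the expected daemon lists are taken from the two jps listings above.

```shell
# Hypothetical helper: report which expected daemons are absent from jps output.
# Reads jps output ("PID Name" per line) on standard input.
# Usage: jps | missing_daemons NAME...
missing_daemons() {
    running=$(awk '{ print $2 }')
    result=0
    for name in "$@"; do
        # -F fixed string, -x whole-line match, so "NameNode" does not match "SecondaryNameNode".
        if ! printf '%s\n' "$running" | grep -qxF -e "$name"; then
            echo "missing: $name"
            result=1
        fi
    done
    return $result
}

# Example, on master:
#   jps | missing_daemons NameNode SecondaryNameNode DataNode JobTracker TaskTracker HMaster HRegionServer
# Example, on slave:
#   jps | missing_daemons DataNode TaskTracker HRegionServer
```

The function prints nothing and returns 0 when every expected daemon is running.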
